Previous Experience

Internships and Roles

Summer 2023 | San Diego, CA

Software Engineering Intern, Amazon

Composing data at scale to prevent platform abuse.

Platform abuse is a $300M+ problem. Each day, 9-10 million orders are processed through our machine learning systems to detect fraud patterns. My team owned an ingestion pipeline to transform and vend data to machine learning and data visualization teams.

I learned to write scalable and maintainable code. Developers must master both the pen that designs and the sword that executes. I identified an optimization that used asynchronous call batching to cut API calls to data provider teams by 8x.
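To give a feel for what asynchronous call batching looks like, here is a minimal Python sketch. It is not the production code: the `Batcher` class, the `fetch_batch` callable, and the batch size of 8 are all hypothetical names and values chosen for illustration. Individual lookups issued in the same event-loop tick are coalesced into a handful of batched calls.

```python
import asyncio


class Batcher:
    """Coalesce individual lookups into batched calls (illustrative only)."""

    def __init__(self, fetch_batch, max_size=8):
        # fetch_batch is a hypothetical async callable: list of keys -> dict of results
        self._fetch_batch = fetch_batch
        self._max_size = max_size
        self._pending = []           # (key, future) pairs waiting for the next flush
        self._flush_scheduled = False
        self._tasks = set()          # keep strong references to running flush tasks

    async def get(self, key):
        future = asyncio.get_running_loop().create_future()
        self._pending.append((key, future))
        if not self._flush_scheduled:
            # Schedule one flush; callers queued in the same tick share it.
            self._flush_scheduled = True
            task = asyncio.create_task(self._flush())
            self._tasks.add(task)
            task.add_done_callback(self._tasks.discard)
        return await future

    async def _flush(self):
        pending, self._pending = self._pending, []
        self._flush_scheduled = False
        for start in range(0, len(pending), self._max_size):
            chunk = pending[start:start + self._max_size]
            try:
                results = await self._fetch_batch([key for key, _ in chunk])
            except Exception as exc:
                # Propagate the failure to every caller in this chunk.
                for _, future in chunk:
                    if not future.done():
                        future.set_exception(exc)
                continue
            for key, future in chunk:
                if not future.done():
                    future.set_result(results[key])


async def demo():
    calls = []

    async def fetch_batch(keys):
        # Stand-in for a remote data provider; records each batched call.
        calls.append(list(keys))
        return {key: key * 10 for key in keys}

    batcher = Batcher(fetch_batch, max_size=8)
    results = await asyncio.gather(*(batcher.get(i) for i in range(16)))
    return results, len(calls)
```

Running `asyncio.run(demo())` issues 16 lookups but only 2 calls to the stand-in provider, which is the kind of reduction batching is meant to deliver.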

I worked on safely migrating endpoints of our core API from Java to a Federated GraphQL solution. We used TypeScript and deployed using CDK (IaC) on a full suite of AWS services (EC2, Lambda, S3, RDS, Cognito, IAM, etc.).

Consider reading this article on Netflix's GraphQL migration to get an idea of the problem.

Fall 2023 | Denver, CO

Software Engineering Intern, Aurora Insight

Terrestrial sensing of quadrature signals.

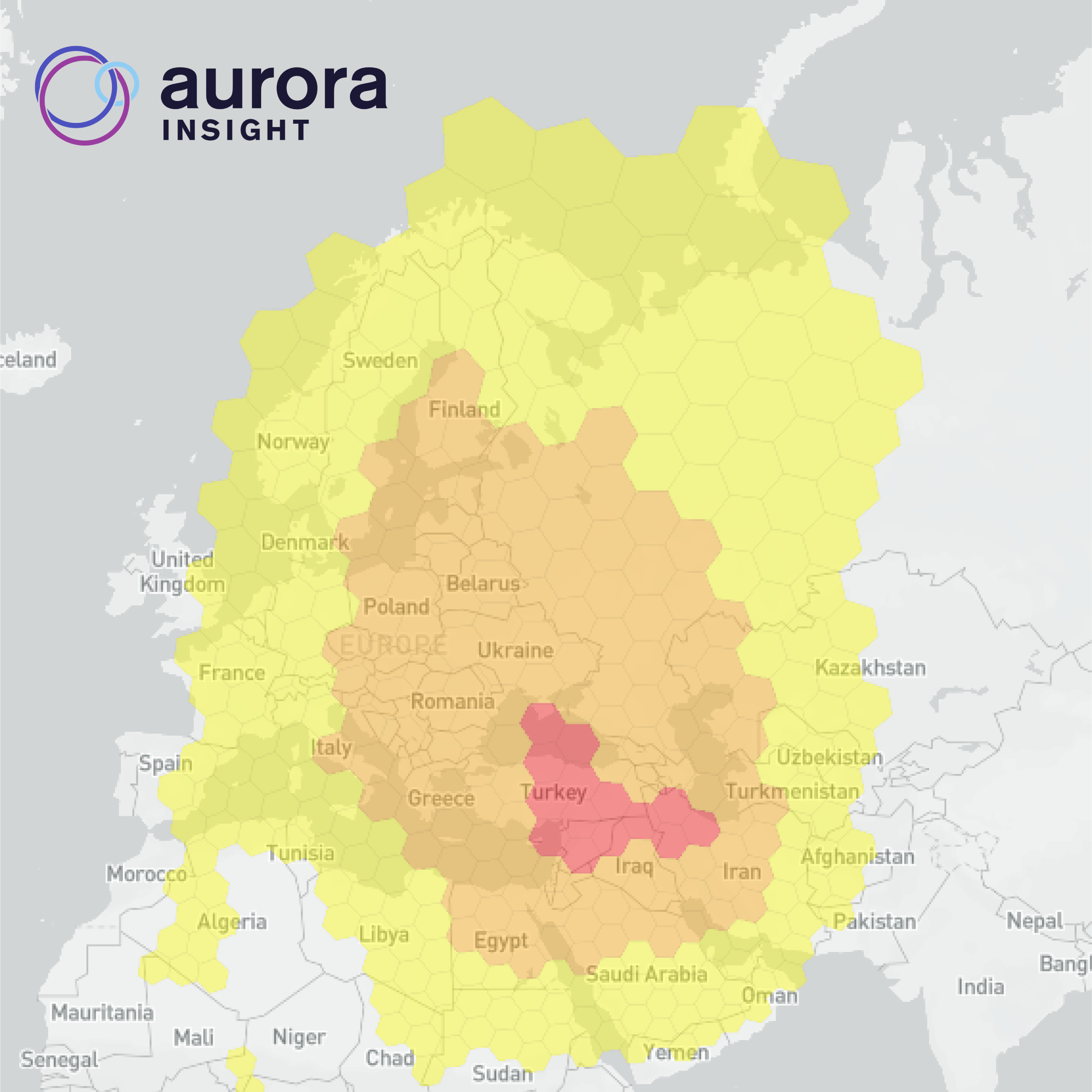

We analyze petabytes of RF data to provide insights for government and commercial use. The work ranges from detecting GNSS interference from space at the outset of the Russian invasion of Ukraine to deploying terrestrial sensors to map 5G for telecommunications.

Working at a startup requires broad ownership and moving fast. We had started connecting our sensors to the internet to remove the need for an on-site operator. I built the end-to-end portal to monitor, sample, and control all of our terrestrial sensors remotely.

I used a ReactJS frontend, a FastAPI Python backend, an AWS RDS database, and an S3 object store. On board the sensors, Linux daemons transmitted updates using Server-Sent Events. The finished portal was deployed with Docker.
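For readers unfamiliar with Server-Sent Events, the wire format is plain text: optional `id:` and `event:` fields, a `data:` field, and a blank line to end each message. The sketch below shows that framing in Python using only the standard library. The function names and the shape of a sensor reading are hypothetical, not the portal's actual code.

```python
import json
from typing import Iterable, Iterator, Optional


def format_sse(data: dict, event: Optional[str] = None,
               event_id: Optional[str] = None) -> str:
    """Frame one message in the text/event-stream format."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")
    # json.dumps escapes newlines inside strings, so one data line is enough.
    lines.append(f"data: {json.dumps(data)}")
    # A blank line terminates the message.
    return "\n".join(lines) + "\n\n"


def sensor_event_stream(readings: Iterable[dict]) -> Iterator[str]:
    """Yield one framed 'status' event per reading (hypothetical payloads)."""
    for seq, reading in enumerate(readings):
        yield format_sse(reading, event="status", event_id=str(seq))
```

In a FastAPI app, a generator like this could be returned through `StreamingResponse` with `media_type="text/event-stream"`, and a browser could consume it with `EventSource`.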

Winter 2022 | Remote, NH

Software Engineering Intern, TomTom

Obtaining real-time map updates.

TomTom provides real-time mapping data for companies such as Uber, Microsoft, and Volkswagen. Map condition alerts are a result of user reporting and scraping data at scale. My team worked at the top of a data pipeline to obtain new leads for real-time road condition changes.

We ingested unstructured text data into a number of Apache Kafka topics. Apache Spark was used for NLP and data transformations with the embedded geo-metadata. Processed leads were stored in downstream databases.

I wrote Python-based feedback loops to reconfigure our ingestion streams for peak and trough periods. Parameters attached to the ingestion streams were adjusted based on downstream outputs to handle spiky load.
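As a rough illustration of such a feedback loop, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `StreamConfig` type, the `max_poll_records` parameter, the backlog signal, and the thresholds. The idea is simply to throttle ingestion when downstream falls behind and open it up again when there is headroom.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamConfig:
    max_poll_records: int  # hypothetical tunable on an ingestion stream


def adjust(config: StreamConfig, backlog: int, target_backlog: int,
           min_records: int = 100, max_records: int = 5000,
           step: float = 0.25) -> StreamConfig:
    """Return a new config nudged toward the target downstream backlog."""
    if backlog > target_backlog:
        # Downstream is falling behind (peak): pull fewer records per poll.
        proposed = config.max_poll_records * (1 - step)
    elif backlog < target_backlog / 2:
        # Plenty of headroom (trough): pull more records per poll.
        proposed = config.max_poll_records * (1 + step)
    else:
        # Within the comfortable band: leave the stream alone.
        proposed = config.max_poll_records
    # Clamp so a run of adjustments can never starve or flood the stream.
    clamped = int(min(max_records, max(min_records, proposed)))
    return StreamConfig(max_poll_records=clamped)
```

Calling `adjust` on a timer with the latest downstream measurement gives a simple closed loop; the clamp keeps repeated adjustments within safe bounds.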